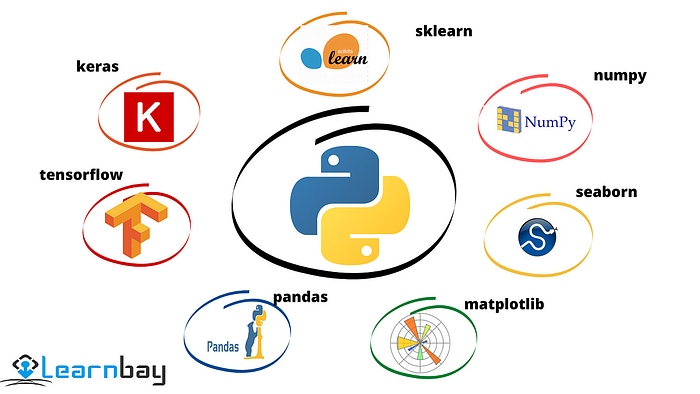

Python’s Data Alchemy: Mastering 10 Essential Libraries for Data Analysts

In the ever-evolving realm of data analysis, Python has emerged as a powerhouse, offering an extensive array of libraries that streamline tasks, enhance productivity, and unravel insights from complex datasets. As a data analyst, harnessing the capabilities of Python libraries can significantly elevate your proficiency and efficiency. In this article, we’ll delve into 10 indispensable Python libraries that every aspiring data analyst should acquaint themselves with.

1. Pandas: Your Data Manipulation Swiss Army Knife

Pandas is a versatile library for data manipulation and analysis, offering powerful data structures like DataFrames and Series. With Pandas, you can effortlessly handle data cleaning, filtering, aggregation, and more, making it an essential tool in any data analyst’s arsenal.
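
To make the cleaning, filtering, and aggregation steps mentioned above concrete, here is a minimal sketch. The sample data, the `Dept` column, and the 60,000 threshold are made up for illustration and are not taken from a real dataset.

```python
import pandas as pd

# Hypothetical sample data with one missing salary
df = pd.DataFrame({
    "Name": ["Alice", "Bob", "Charlie", "Dana"],
    "Dept": ["Sales", "Sales", "Engineering", "Engineering"],
    "Salary": [50000, None, 70000, 90000],
})

# Cleaning: drop rows where the salary is missing
clean = df.dropna(subset=["Salary"])

# Filtering: keep only rows with a salary above 60,000
high_earners = clean[clean["Salary"] > 60000]

# Aggregation: mean salary per department
mean_by_dept = clean.groupby("Dept")["Salary"].mean()

print(high_earners)
print(mean_by_dept)
```

Each step returns a new object rather than modifying `df`, so you can inspect the intermediate results one at a time.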

import pandas as pd

# Create a DataFrame

data = {'Name': ['Alice', 'Bob', 'Charlie'],

        'Age': [25, 30, 35],

        'Salary': [50000, 60000, 70000]}

df = pd.DataFrame(data)

# Display the DataFrame

print(df.head())

2. NumPy: The Foundation of Numerical Computing

NumPy stands as the cornerstone of numerical computing in Python. It provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays efficiently. Whether you’re performing mathematical operations or statistical analysis, NumPy’s speed and versatility make it indispensable.
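
As a rough illustration of what "operating on arrays efficiently" looks like in practice, the sketch below computes per-column and per-row statistics and uses broadcasting to center the data. The array values are arbitrary.

```python
import numpy as np

arr = np.array([[1, 2, 3], [4, 5, 6]])

# Statistics along an axis: axis=0 works down the columns, axis=1 across the rows
col_means = arr.mean(axis=0)   # array([2.5, 3.5, 4.5])
row_sums = arr.sum(axis=1)     # array([ 6, 15])

# Broadcasting: subtract each column's mean from every row without a Python loop
centered = arr - col_means

print(col_means)
print(row_sums)
print(centered)
```

The `axis` argument is usually the part worth double-checking: it names the dimension that gets collapsed, not the one that survives.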

import numpy as np

# Create a NumPy array

arr = np.array([[1, 2, 3], [4, 5, 6]])

# Perform mathematical operations

print(np.mean(arr))

3. Matplotlib: Visualize Your Insights with Ease

Matplotlib is a comprehensive plotting library that enables you to create a diverse range of static, animated, and interactive visualizations. From simple line plots to complex heatmaps and 3D plots, Matplotlib empowers data analysts to convey insights effectively and intuitively.
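
Beyond the `pyplot` shortcuts, Matplotlib also has an object-oriented interface that gives you explicit control over each panel. The sketch below draws two plots side by side and saves them to a file. The data, the file name `example_plots.png`, and the choice of the non-interactive `Agg` backend are assumptions made for illustration.

```python
import matplotlib
matplotlib.use("Agg")  # Assumption: render to a file instead of opening a window
import matplotlib.pyplot as plt

x = [1, 2, 3, 4]
y = [v ** 2 for v in x]

# One figure with two side-by-side axes
fig, (ax_line, ax_bar) = plt.subplots(1, 2, figsize=(8, 3))

ax_line.plot(x, y, marker="o")
ax_line.set_title("Line plot")
ax_line.set_xlabel("x")
ax_line.set_ylabel("x squared")

ax_bar.bar(x, y)
ax_bar.set_title("Bar chart")

fig.tight_layout()
fig.savefig("example_plots.png")
```

If you are working in a notebook or want a window to pop up, drop the `matplotlib.use("Agg")` line and call `plt.show()` instead of `fig.savefig(...)`.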

import matplotlib.pyplot as plt

# Create a simple line plot

plt.plot([1, 2, 3, 4], [1, 4, 9, 16])

plt.xlabel('X-axis')

plt.ylabel('Y-axis')

plt.title('Simple Line Plot')

plt.show()

4. Seaborn: Beautify Your Visualizations with Ease

Seaborn is built on top of Matplotlib and offers a higher-level interface for creating attractive and informative statistical graphics. With its concise syntax and built-in themes, Seaborn simplifies the creation of complex visualizations, making data exploration and presentation a breeze.

import matplotlib.pyplot as plt

import seaborn as sns

# Load a sample dataset

tips = sns.load_dataset('tips')

# Create a scatter plot

sns.scatterplot(x='total_bill', y='tip', data=tips)

plt.title('Scatter Plot of Total Bill vs Tip')

plt.show()

5. Scikit-learn: Unleash the Power of Machine Learning

Scikit-learn is a versatile machine learning library that provides tools for data mining, classification, regression, clustering, and more. With its user-friendly interface and extensive documentation, Scikit-learn empowers data analysts to build and deploy machine learning models with ease, facilitating predictive analytics and pattern recognition tasks.

from sklearn.linear_model import LinearRegression

from sklearn.datasets import load_diabetes

# Load the diabetes dataset bundled with scikit-learn

diabetes = load_diabetes()

X, y = diabetes.data, diabetes.target

# Fit a linear regression model

model = LinearRegression()

model.fit(X, y)

# Make predictions

predictions = model.predict(X)
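
The example above predicts on the same rows the model was trained on, which tends to flatter the results. A common alternative is to hold out part of the data for evaluation. The sketch below assumes scikit-learn's bundled diabetes dataset, an 80/20 split, and R² as the metric; all three are illustrative choices.

```python
from sklearn.datasets import load_diabetes
from sklearn.linear_model import LinearRegression
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

# Load features and target as NumPy arrays
X, y = load_diabetes(return_X_y=True)

# Hold out 20% of the rows for evaluation; random_state makes the split repeatable
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Fit on the training rows only
model = LinearRegression().fit(X_train, y_train)

# Score on rows the model has never seen
score = r2_score(y_test, model.predict(X_test))
print(f"R^2 on held-out data: {score:.3f}")
```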

6. Statsmodels: Dive Deep into Statistical Modeling

Statsmodels is a Python library for estimating and interpreting statistical models. Whether you’re conducting hypothesis tests, fitting regression models, or performing time series analysis, Statsmodels offers a comprehensive suite of tools for exploring and understanding data from a statistical perspective.

import statsmodels.api as sm

# Fit a linear regression model, reusing X and y from the scikit-learn example above

X = sm.add_constant(X)  # Add a constant (intercept) term

model = sm.OLS(y, X)

results = model.fit()

# Print summary statistics

print(results.summary())

7. BeautifulSoup: Web Scraping Made Simple

BeautifulSoup is a powerful library for web scraping and parsing HTML and XML documents. With its intuitive syntax and robust features, BeautifulSoup simplifies the extraction of data from websites, enabling data analysts to collect and analyze data from diverse online sources effortlessly.

from bs4 import BeautifulSoup

import requests

# Fetch HTML content from a website

url = 'https://example.com'

response = requests.get(url, timeout=10)

response.raise_for_status()  # Stop early on HTTP errors (4xx/5xx)

html_content = response.text

# Parse HTML with BeautifulSoup

soup = BeautifulSoup(html_content, 'html.parser')
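
Once the document is parsed, the next step is usually to pull out specific elements. The sketch below works on a small inline HTML string so it runs without a network connection. The markup, the heading text, and the link targets are invented for illustration.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML snippet standing in for a downloaded page
html = """
<html><body>
  <h1>Quarterly Report</h1>
  <a href="/q1.csv">Q1 data</a>
  <a href="/q2.csv">Q2 data</a>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# find() returns the first match; get_text(strip=True) trims surrounding whitespace
title = soup.find("h1").get_text(strip=True)

# find_all() returns every match; href=True skips anchors without an href attribute
links = [a["href"] for a in soup.find_all("a", href=True)]

print(title)
print(links)
```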

8. Requests: Simplifying HTTP Requests

Requests is a simple yet elegant library for making HTTP requests in Python. Whether you’re fetching data from APIs, downloading files, or interacting with web services, Requests streamlines the process of handling HTTP communication, allowing data analysts to retrieve data from external sources seamlessly.

import requests

# Make an HTTP GET request

response = requests.get('https://api.example.com/data', timeout=10)

# Print the response content

print(response.text)
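
When you call an API, you will usually want query parameters, a timeout, and some error handling. The sketch below wraps those in a small helper. The function name `fetch_json`, the `page` and `limit` parameters, and the URL are hypothetical. The last lines build the request without sending it, so you can inspect the final URL offline.

```python
import requests


def fetch_json(url, params=None, timeout=10):
    """GET a URL and return the decoded JSON body (hypothetical helper)."""
    response = requests.get(url, params=params, timeout=timeout)
    response.raise_for_status()  # Raise an exception on 4xx/5xx responses
    return response.json()


# Build (but do not send) a request to see how the parameters are encoded
prepared = requests.Request(
    "GET",
    "https://api.example.com/data",  # Placeholder URL
    params={"page": 1, "limit": 10},
).prepare()

print(prepared.url)
```

Passing parameters as a dictionary lets Requests handle the URL encoding for you, which avoids subtle bugs when values contain spaces or special characters.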

9. SQLAlchemy: Master the Art of Database Interaction

SQLAlchemy is a comprehensive toolkit for working with SQL databases in Python. It provides two layers: Core, a SQL expression language for building and executing queries, and an ORM (Object-Relational Mapping) that maps tables to Python classes. Whether you’re querying databases, performing CRUD operations, or managing transactions, SQLAlchemy simplifies database interaction and management tasks.

from sqlalchemy import create_engine, MetaData, Table

# Create a SQLAlchemy engine

engine = create_engine('sqlite:///example.db')

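# Reflect an existing database table (assumes 'my_table' already exists in example.db)

meta = MetaData()

table = Table('my_table', meta, autoload_with=engine)

# Query the database; the connection is closed when the block exits

with engine.connect() as connection:

    result = connection.execute(table.select())

    rows = result.fetchall()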
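
If you don't have a database file handy, you can try the same ideas against an in-memory SQLite database. The sketch below creates a table, inserts a few rows, and runs an aggregate query. The `sales` table, its columns, and the values are made up for illustration.

```python
from sqlalchemy import create_engine, text

# In-memory SQLite database: nothing is written to disk
engine = create_engine("sqlite:///:memory:")

# engine.begin() opens a transaction and commits it when the block exits
with engine.begin() as conn:
    conn.execute(text("CREATE TABLE sales (region TEXT, amount REAL)"))

    # Bound parameters (:region, :amount) keep values out of the SQL string
    conn.execute(
        text("INSERT INTO sales (region, amount) VALUES (:region, :amount)"),
        [
            {"region": "North", "amount": 120.0},
            {"region": "South", "amount": 80.0},
            {"region": "North", "amount": 30.0},
        ],
    )

    rows = conn.execute(
        text(
            "SELECT region, SUM(amount) AS total "
            "FROM sales GROUP BY region ORDER BY region"
        )
    ).all()

totals = {region: total for region, total in rows}
print(totals)
```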

10. TensorFlow: Embrace the Power of Deep Learning

TensorFlow is an open-source machine learning framework developed by Google that specializes in deep learning tasks. With its flexible architecture and extensive ecosystem of tools and libraries, TensorFlow empowers data analysts to build and deploy deep learning models for a wide range of applications, from image recognition to natural language processing.

import tensorflow as tf

# Create a simple neural network model

model = tf.keras.Sequential([

    tf.keras.Input(shape=(784,)),  # e.g. flattened 28x28 images

    tf.keras.layers.Dense(64, activation='relu'),

    tf.keras.layers.Dense(10, activation='softmax')

])

# Compile the model

model.compile(optimizer='adam',

              loss='sparse_categorical_crossentropy',

              metrics=['accuracy'])

In the dynamic landscape of data analysis, proficiency in Python libraries is paramount for unlocking the full potential of data-driven insights. By mastering the 10 essential libraries outlined in this article, data analysts can streamline workflows, derive actionable insights, and drive informed decision-making with confidence and precision. So, dive deep into the world of Python libraries, and embark on a journey towards data mastery and innovation.